Introduction

Welcome, everyone!

This blog post compares the performance of DeepSeek and ChatGPT.

ChatGPT, developed by OpenAI, is an advanced conversational model that generates human-like text in response to user prompts. Its transformer architecture allows it to understand and produce coherent text, which makes it valuable for natural language processing tasks such as customer service automation and content generation. ChatGPT excels at dialogue and at giving contextually appropriate responses, making it an essential tool for exploring AI in communication. One of its key strengths is fast, efficient response generation optimized for real-time interaction, which is crucial for live chat systems and interactive user interfaces. Its pricing caters to various organizational needs: subscription plans for the ChatGPT app and usage-based billing for OpenAI’s API let businesses scale usage cost-effectively. However, transparency regarding its training data and potential biases remains a concern for researchers, despite OpenAI’s efforts to address these issues.

DeepSeek represents a significant advance in AI technology, with a focus on information retrieval and response generation. It is designed to understand and process large amounts of data, which makes it particularly useful for researchers who need precise and comprehensive data analysis. Its deep learning architecture allows it to learn from extensive datasets and adapt to various research domains. DeepSeek also stands out for response speed and efficiency: it uses caching and optimized algorithms to reduce response times, which helps when working with large datasets or complex queries. Its pricing is designed to accommodate different organizational needs, with flexible tiers and transparent cost estimates. Researchers also benefit from the model’s transparency about its training data, which offers insight into dataset composition and encourages informed usage.

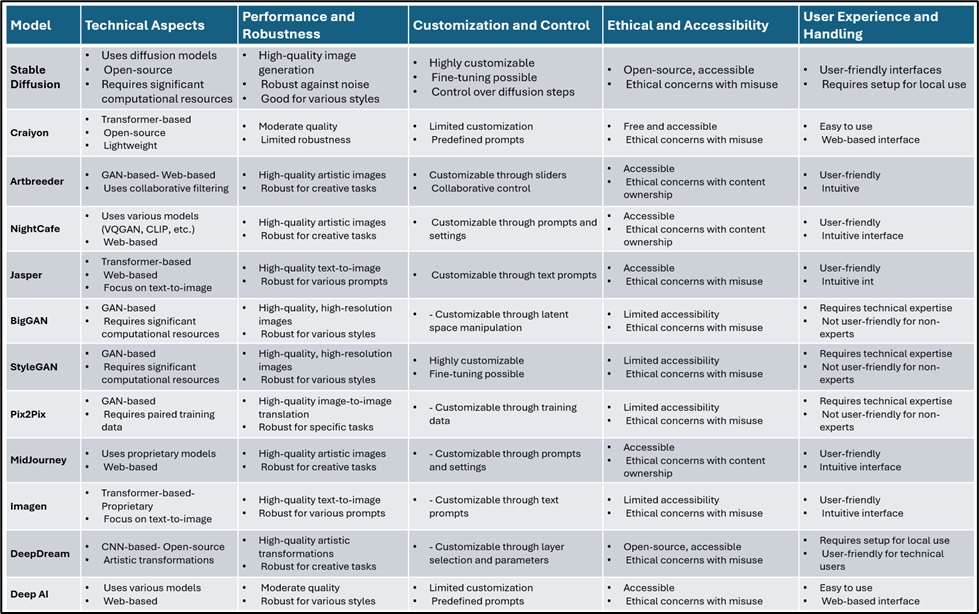

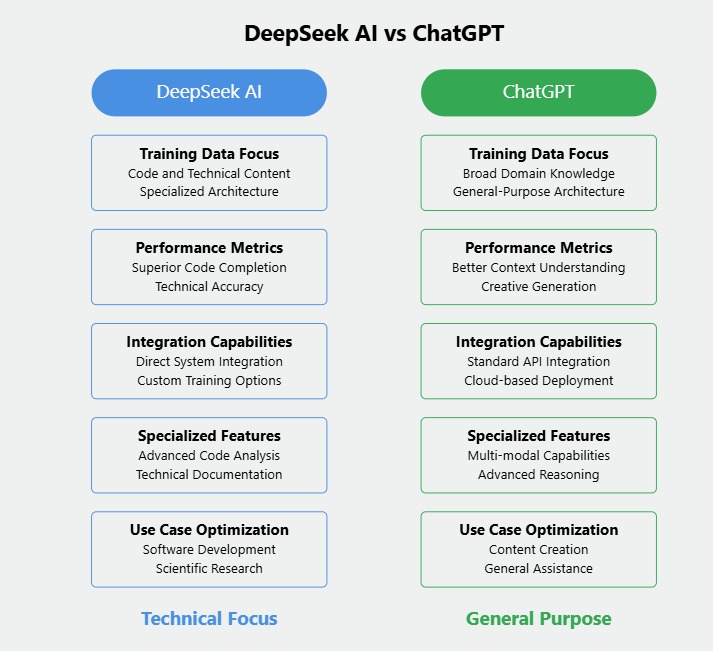

The figures above show the main attributes that differentiate the two models.

Analysis

Response Generation Speed and Efficiency

Definition of Response Generation Speed

Response generation speed is the time an AI model takes to produce a response after receiving a prompt. It is crucial for applications that require real-time interaction, because it affects both user experience and practical deployment. Factors that influence speed include model size and complexity, the length of the prompt and of the generated output, and the computational resources available at inference time.
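To make this definition concrete, here is a minimal Python sketch that times a single request. It assumes a streaming interface, so it records both the time to the first token and the total time to the full reply. `fake_model` and `measure_latency` are hypothetical names: `fake_model` only simulates token delays and is not a real ChatGPT or DeepSeek client, so you would swap in your own API call.

```python
import time
from typing import Callable, Iterator


def fake_model(prompt: str) -> Iterator[str]:
    """Hypothetical stand-in for a streaming chat API (not a real client)."""
    for word in ("This", "is", "a", "reply", "to:", prompt):
        time.sleep(0.01)  # simulate per-token generation delay
        yield word


def measure_latency(model: Callable[[str], Iterator[str]], prompt: str) -> dict:
    """Return time to first token, total time (seconds) and the full response."""
    start = time.perf_counter()
    first_token_at = None
    tokens = []
    for token in model(prompt):
        if first_token_at is None:
            first_token_at = time.perf_counter()  # first visible output
        tokens.append(token)
    end = time.perf_counter()
    return {
        "time_to_first_token": (first_token_at or end) - start,
        "total_time": end - start,
        "response": " ".join(tokens),
    }


if __name__ == "__main__":
    result = measure_latency(fake_model, "hello")
    print(f"first token after {result['time_to_first_token']:.3f}s, "
          f"full reply after {result['total_time']:.3f}s")
```

For chat interfaces, time to first token often shapes perceived speed more than total time, because users start reading as soon as text appears.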

In practical applications, fast response times enhance user satisfaction, especially in customer service or interactive learning environments. Organizations must consider the cost implications, as faster response times may require more advanced infrastructure.

The quality of the training data affects how useful the responses are, and transparency about that data and the training process builds trust and improves adoption rates.

Benchmarks for Measuring Efficiency

Efficiency benchmarks for AI models such as ChatGPT and DeepSeek include response speed, accuracy, resource utilization, and cost-effectiveness. Researchers compare models by their average response times under various load conditions and by the cost of running them.
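A comparison like this can be summarized with a few standard statistics. The sketch below is one possible approach, not a standard benchmark: it reports the mean, the median, and a nearest-rank 95th percentile of measured latencies, plus the average cost per request. `summarize`, `percentile`, and `BenchmarkSummary` are hypothetical names, and the sample values are placeholders, not measurements of either model.

```python
import math
import statistics
from dataclasses import dataclass
from typing import Sequence


@dataclass
class BenchmarkSummary:
    mean_s: float            # average latency in seconds
    median_s: float          # typical latency in seconds
    p95_s: float             # at least 95% of requests took no longer than this
    cost_per_request: float


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile for pct in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_s: Sequence[float], total_cost: float) -> BenchmarkSummary:
    """Summarize latency samples from one benchmark run and that run's cost."""
    if not latencies_s:
        raise ValueError("need at least one latency sample")
    return BenchmarkSummary(
        mean_s=statistics.mean(latencies_s),
        median_s=statistics.median(latencies_s),
        p95_s=percentile(latencies_s, 95),
        cost_per_request=total_cost / len(latencies_s),
    )


if __name__ == "__main__":
    # Placeholder samples for illustration only; replace with real measurements.
    print(summarize([0.8, 1.0, 1.2, 0.9, 3.5], total_cost=0.05))
```

With these placeholder latencies the median is 1.0 s but the 95th percentile is 3.5 s. Reporting a tail percentile alongside the average keeps a single slow outlier from being hidden, which matters for real-time use cases.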

Training data quality and transparency are also critical. Researchers assess models on the size, source, and variety of their training datasets, as well as on the transparency of the training process.

By establishing clear benchmarks, researchers can make informed decisions about which model best suits their needs, balancing speed, cost, and data transparency.

Performance Analysis of ChatGPT and DeepSeek

ChatGPT

ChatGPT excels at generating coherent, contextually relevant responses across a variety of topics, making it well suited to conversational applications. Its transformer architecture allows it to maintain context over extended dialogues, which improves the user experience. However, it can sometimes produce inaccuracies or exhibit biases that reflect its training data.

In terms of response generation speed, ChatGPT performs well under moderate load, delivering real-time responses suitable for applications that need immediate feedback. However, its performance can fluctuate depending on input complexity and the deployment environment. Its pricing options cater to different usage patterns and deployment strategies, which appeals to startups and smaller enterprises.

Training data and model transparency are crucial for understanding ChatGPT’s performance and reliability. The model is trained on diverse internet text, which contributes to its versatility. However, the lack of transparency about specific datasets and performance metrics can raise concerns about reliability and ethical use.

DeepSeek

DeepSeek is notable for generating contextually relevant responses across various domains, which makes it useful in academic and technical fields. Its algorithms improve contextual comprehension, producing more accurate and relevant outputs.

DeepSeek performs well in response generation speed and efficiency, particularly when processing long queries or multi-turn conversations. This efficiency is vital for researchers who rely on quick access to information. Its pricing models are flexible and cater to organizations ranging from startups to large enterprises.

The model’s training data, which includes academic literature and technical documents, enhances its ability to generate informed responses in specialized fields. Model transparency is a strong point for DeepSeek: it provides insight into its algorithms and decision-making processes, which fosters trust among users.

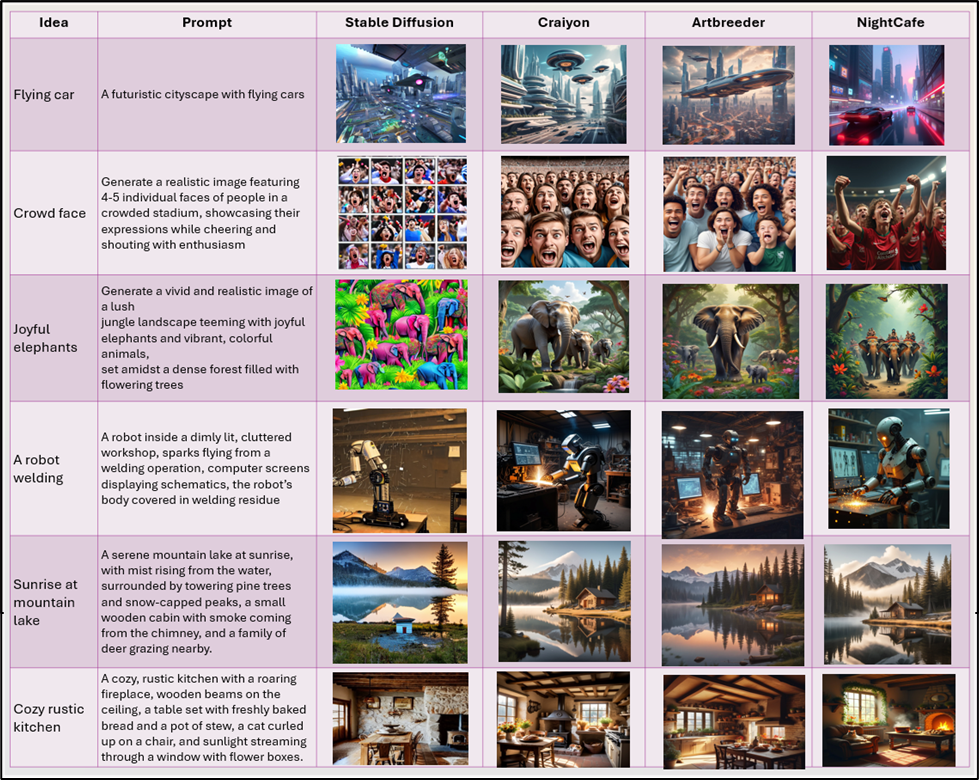

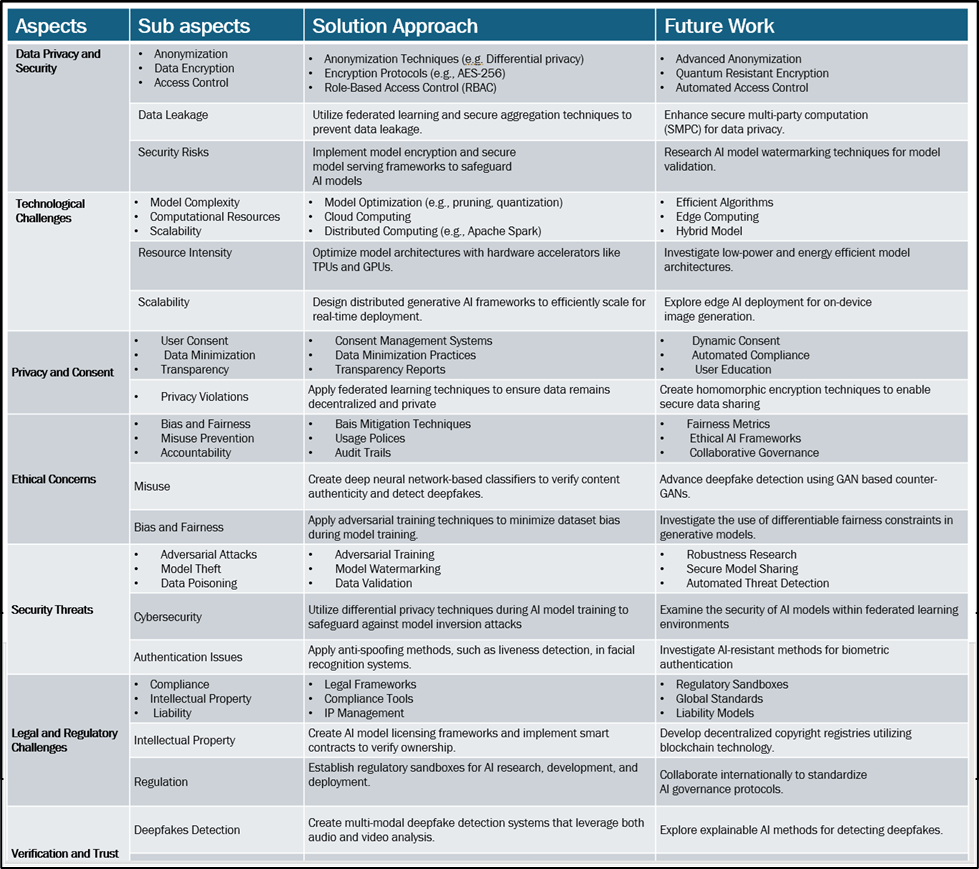

The table above compares the performance of the two models.

Cost and Pricing Models for Businesses

Overview of Pricing Structures in AI Tools

Understanding the cost structures of AI tools such as ChatGPT and DeepSeek is crucial for researchers. ChatGPT is offered through subscription tiers, so users can pay according to their needs. This can be especially beneficial in academic environments with limited or irregular funding, because it offers flexibility and makes expenses easier to forecast and budget. In contrast, DeepSeek targets enterprise-level applications with usage-based pricing: costs grow with query volume, but the model offers customization and scalability, which can be valuable for organizations seeking comprehensive solutions.

Researchers also need to be aware of potential extra costs, such as data storage fees, premium features, and technical support. Both tools may impose usage limits, with overage charges if those limits are exceeded. Evaluating these hidden costs is essential for understanding the total cost of ownership and managing the overall budget. When choosing between ChatGPT and DeepSeek, researchers should consider not only pricing but also response speed, efficiency, and training data quality, so that their choice aligns with their project objectives.
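To make the total-cost-of-ownership point concrete, the sketch below compares two simplified billing shapes: a flat subscription with an included quota plus per-request overage, and pay-as-you-go billing per million input and output tokens. The function names, billing shapes, and every price are hypothetical placeholders, not actual OpenAI or DeepSeek rates.

```python
def subscription_cost(monthly_fee: float, included_requests: int,
                      requests: int, overage_per_request: float) -> float:
    """Flat monthly fee covering a quota; requests beyond it incur overage."""
    overage_requests = max(0, requests - included_requests)
    return monthly_fee + overage_requests * overage_per_request


def usage_cost(input_tokens: int, output_tokens: int,
               price_in_per_million: float, price_out_per_million: float) -> float:
    """Pay-as-you-go billing, priced per million input and output tokens."""
    return (input_tokens * price_in_per_million
            + output_tokens * price_out_per_million) / 1_000_000


def total_cost_of_ownership(base_cost: float, storage: float = 0.0,
                            support: float = 0.0,
                            premium_features: float = 0.0) -> float:
    """Add the 'hidden' extras (storage, support, premium features) to the base bill."""
    return base_cost + storage + support + premium_features


if __name__ == "__main__":
    # All numbers below are hypothetical placeholders, not real vendor prices.
    sub = subscription_cost(monthly_fee=20.0, included_requests=1_000,
                            requests=1_500, overage_per_request=0.01)
    pay = usage_cost(input_tokens=2_000_000, output_tokens=500_000,
                     price_in_per_million=0.5, price_out_per_million=2.0)
    print(f"subscription: {total_cost_of_ownership(sub, storage=3.0, support=10.0):.2f}")
    print(f"usage-based:  {total_cost_of_ownership(pay, storage=3.0, support=10.0):.2f}")
```

Keeping the extras in a separate function reflects the point above: the base bill alone understates what a project will actually spend, and overage can make a subscription more expensive than expected once usage exceeds the quota.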

Training Data and Model Transparency

Importance of Training Data in AI Models

Training data is the foundation of an AI model and shapes its ability to generate coherent, contextually relevant responses. For ChatGPT and DeepSeek, the quality, diversity, and volume of training data are critical to performance.

The table below compares the training data of the two models.

Data Sources for ChatGPT

ChatGPT’s effectiveness relies on diverse, high-quality training data drawn from books, websites, and other written material. This extensive dataset allows ChatGPT to learn language patterns, nuances, and contextual meaning, resulting in coherent responses across many topics. However, the lack of transparency about specific datasets and sources raises concerns about potential bias and accountability.

Data Sources for DeepSeek

DeepSeek takes a specialized approach to data sourcing, focusing on domain-specific knowledge from academic journals, research papers, industry reports, and real-time databases. This targeted approach improves its ability to generate accurate, contextually appropriate responses in specialized fields such as medicine, technology, and law. DeepSeek’s transparency about its data sources fosters trust, making it a dependable tool for researchers who need precise outputs in niche areas.

Comparison

- ChatGPT: Broad dataset, versatile responses, concerns about transparency and bias.

- DeepSeek: Specialized datasets, accurate in niche areas, transparent about data sources.

Transparency in Model Training

Transparency in AI model training is crucial for evaluating performance, reliability, and ethical implications.

ChatGPT:

- Developed by OpenAI and trained on diverse datasets from the internet.

- General principles of training are shared, but specific datasets are undisclosed.

- Emphasizes diverse data to enhance text generation but raises concerns about potential biases and limitations.

DeepSeek:

- Provides explicit information about training data and methodologies.

- Detailed documentation allows researchers to understand biases and strengths.

- Openness about training framework aids reproducibility in research.

Implications:

- Transparency about training methodologies helps researchers interpret differences in response speed and efficiency.

- Understanding training processes helps researchers choose models that align with ethical considerations and performance needs.

Use Cases and Applications

The table below compares the applications and use cases of DeepSeek and ChatGPT.

Conclusion and Future Directions

Conclusion

Future Trends in Conversational AI

Future trends in conversational AI will transform the landscape, especially as models like ChatGPT and DeepSeek compete for leadership. Advances in natural language processing and machine learning will produce more sophisticated conversational agents, with algorithms optimized for real-time interaction and minimal latency. Multimodal capabilities will be crucial, allowing these systems to process text, voice, and visual data seamlessly and making them more useful and versatile.

More flexible pricing models will emerge to serve businesses of different sizes, with pay-per-use and scalable subscription services becoming more common. Transparency in model training will be vital: clear communication about data sources and training methodologies will drive user trust and adoption. Researchers will need to watch how ChatGPT and DeepSeek address these transparency concerns.

Continuous improvements in training methodologies will lead to more robust and adaptable models. Efficient transfer learning methods will allow AI agents to learn from smaller datasets while achieving high performance, making conversational AI a pivotal tool across various domains. These trends will shape the future of conversational AI, enhancing capabilities and accessibility.

Final Thoughts on ChatGPT vs. DeepSeek

In summarizing the comparison between ChatGPT and DeepSeek, it is important to acknowledge the strengths and weaknesses each system presents for researchers.

ChatGPT excels at generating coherent, contextually relevant responses across diverse topics, which makes it particularly useful for drafting documents, brainstorming ideas, and running conversational simulations. DeepSeek, in contrast, focuses on information retrieval and analytical reasoning, offering precise insights drawn from extensive datasets; this suits researchers who prioritize data-driven conclusions.

Response generation speed and efficiency are also critical considerations. ChatGPT typically responds quickly, which is an advantage in fast-paced research environments. DeepSeek’s more comprehensive analysis may take slightly longer on complex queries but often yields more accurate and detailed outputs. Researchers must balance the need for speed against the depth of analysis their projects require.

Cost and pricing models differentiate the two platforms significantly. ChatGPT’s subscription-based model offers flexibility for various budgets, making it accessible to both individual researchers and larger institutions. DeepSeek’s usage-based pricing can lead to higher costs for extensive data queries, so researchers need to weigh funding constraints against project requirements.

Training data and model transparency are crucial factors. ChatGPT’s diverse dataset enhances its versatility, but the lack of transparency about its specific training data may raise concerns. DeepSeek emphasizes clarity about its data sources and methodologies, which enhances trust and reliability. For researchers involved in sensitive or ethically complex inquiries, understanding the underlying data and model training is essential for maintaining rigorous academic standards.

Ultimately, the choice between ChatGPT and DeepSeek depends on each researcher’s needs and priorities. ChatGPT offers speed and versatility, while DeepSeek provides depth and transparency in data analysis, so each system serves different aspects of research. As AI technology evolves, researchers must stay informed about these tools’ capabilities and limitations; that knowledge lets them make strategic decisions that enhance their work and contribute meaningfully to their fields.

Vote of thanks

I extend my sincere gratitude to Dr. Ranjith Gopalan and aimldatascienceacademy. Dr. Gopalan took valuable time to review this article, and his feedback helped enhance its quality and impact.